AI tools for architecture are just around the corner and will enable us to deliver spaces that perform better and are enjoyable to use.

When conversations about workplace and real estate turn to the disruptive potential of artificial intelligence, we sometimes offer a pithy response: it’s impossible to have artificial intelligence before one has regular intelligence. That is, one can’t hope to teach a machine to do things that are not yet well understood by flesh-and-blood humans. As we have learned more about cognition and psychology, the chasm between what we can observe and what is automatable actually feels wider, not narrower.

So, it is perhaps unsurprising that AI for architecture and design is still in the early stages of development. Despite quite a lot of pedagogy and thought leadership about design, we don’t really know how people do it—not in a linear, describable, procedural sense that a computer could understand. Computers can be given constraints and be programmed with aesthetic rules of thumb like the Golden Ratio, but these are merely human heuristics, not the capability to make actual judgements about quality.

Even so, the information that is already at our collective fingertips is more valuable than we may think. As we recently wrote for Corporate Real Estate Journal, a workplace is an environment full of tools that are data-enabled, or easily could be: secure access points, destination elevators, computers, mobile devices, and even smart appliances. Today’s tech-enabled workplaces provide enough data to begin differentiating between human factors that are relatively consistent from place to place (for instance, the positive impact of access to daylight and a clear sense of prospect) and those that are particular to an individual environment and culture.

The ubiquity of low-cost computing power and the availability of rich data sources have set the stage for a period of rapid innovation in smart offices. Innovators in programs like MITDesignX are continuing to explore the intersections between technology and design; their annual cohort regularly includes at least one AI-themed project. What shape might this all take in the near future?

Social data: the difference between math and AI

Compared to human awareness, much of what passes for AI these days is really just fancy math with a side of marketing. The exact dividing line between AI and regular computation is a fuzzy one. As a fascinating piece by AI researcher Arend Hintze makes clear, if one thinks of AI on a scale ranging from a digital alarm clock to self-aware machines that can run the world, we are still pretty far from the latter. Once one understands the way the algorithms are built, the artifice of intelligence often falls away.

Still, a smart speaker is qualitatively different from a calculator. By creating a program of sufficient complexity and giving it access to a rich collection of human-generated data, we have created a simulacrum of intelligence. While it may not be intelligent in the philosophical sense—it lacks free will or self-awareness—it exists in the space between a simple machine and consciousness, and it delivers value to the user.

What really defines artificial intelligence in the sense that people tend to use it may be the type of data that informs an algorithm’s decision-making. To simulate or augment human intelligence, machines must first be given human-centric data on which to operate and human criteria with which to evaluate it. As we like to say, smart buildings are social buildings.

On this front, there is good news, especially for the built environment. The measurements that we use in the architecture field are, at the root, human. We measure spaces in feet and count things in digits. The information gathered by our smart buildings (entry and exit, usage of spaces or amenities) is inherently tied to the human experience. Technology to collect this data is increasingly baked into buildings from the beginning of the design process. After-market solutions, like LMN Architects’ PODD, make it possible to retrofit existing spaces as well.

As these technologies continue to increase in sophistication, opportunities to gain insight will multiply. More consistent collection of data at all scales is needed to make smarter AI buildings possible. These tools will produce the pool of data that can make AI for the built environment a reality.

Typologies of AI for architecture

Already, there has been much progress in developing systems that supplement human intelligence in design. These efforts can be grouped into three broad categories: computational design, behavioral modeling, and responsive environments.

Iteration and filtering

In the years since Kasparov lost to Deep Blue, a consensus view emerged that the best possible chess player is neither human nor machine—it’s human and machine. By combining a human’s strategic instincts with a computer’s raw computational power, one could get the best of both. Machines are now emerging that can teach themselves to play. Despite its staggering mathematical complexity, chess is still a game with a finite end point and a clear set of rules.

Architecture is much more open-ended. One of the challenges inherent in much design work is that the decision space is so large as to seem practically infinite. Given a set of requirements, the number of possible designs is only limited by the designer’s time and willingness to experiment. What if they never discover the optimal solution? This is one area in which algorithms can shine.

Energy modeling was one of the first domains in which this kind of augmented intelligence saw widespread use. The cleverest human teams still strain to optimize the huge number of variables that can impact something like a building’s energy usage—e.g. the effect of the facade on the amount of natural lighting, costs and efficiency of various renewables based on historical weather patterns, the heat transfer functions of different construction materials, and more. In a computer-aided process, humans tell the computer what to optimize, then let the system evaluate thousands of options and spit out just four or five of the best for the people to choose between. In addition to prototyping the physics and appearance of a building, such models can be helpful in assessing the experiential aspects. Clients can even directly experience the environments through virtual reality.
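The iterate-and-filter loop described above can be sketched in a few lines of Python. This is only an illustration under loud assumptions: the design variables, their ranges, and the `toy_energy_score` function are all hypothetical stand-ins, and a real workflow would call an actual energy simulation in place of the made-up scoring formula.

```python
import heapq
import itertools

# Hypothetical design variables; the ranges are illustrative only.
WINDOW_RATIOS = [0.2, 0.3, 0.4, 0.5, 0.6]             # glazed share of the facade
ORIENTATIONS = [0, 45, 90, 135, 180, 225, 270, 315]   # degrees from north
INSULATION_R = [10, 20, 30, 40]                       # wall R-value


def toy_energy_score(window_ratio, orientation, r_value):
    """Made-up proxy for annual energy use (lower is better).

    This is NOT a physical model; it only stands in for a real
    energy simulation so that the filtering step can be shown.
    """
    lighting = 100 * (1 - window_ratio)               # less glass, more electric light
    heat_loss = 400 * window_ratio + 1500 / r_value   # glass and thin walls leak heat
    # Penalize facades that face away from due south (180 degrees).
    off_south = min(abs(orientation - 180), 360 - abs(orientation - 180))
    solar_penalty = 0.2 * off_south * window_ratio
    return lighting + heat_loss + solar_penalty


def shortlist(n=5):
    """Score every combination and return the n lowest-scoring options."""
    options = itertools.product(WINDOW_RATIOS, ORIENTATIONS, INSULATION_R)
    scored = ((toy_energy_score(*opt), opt) for opt in options)
    return heapq.nsmallest(n, scored)


if __name__ == "__main__":
    for score, (ratio, orientation, r_value) in shortlist():
        print(f"{score:7.1f}  glazing={ratio}  facing={orientation}  R={r_value}")
```

The humans still decide what the score means and make the final choice among the handful of options; the machine only does the exhaustive evaluation (160 combinations here, thousands in practice).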

Simulation and prediction

Collaborative work is essential to most businesses. For years, researchers and thought leaders have worked to quantify its impact and to convince business leaders of its importance. Yet, to date, far less rigor has been applied to the practical development of more collaborative environments. That gap is a bit of an outlier in a management culture that purports to value measurement and experimentation.

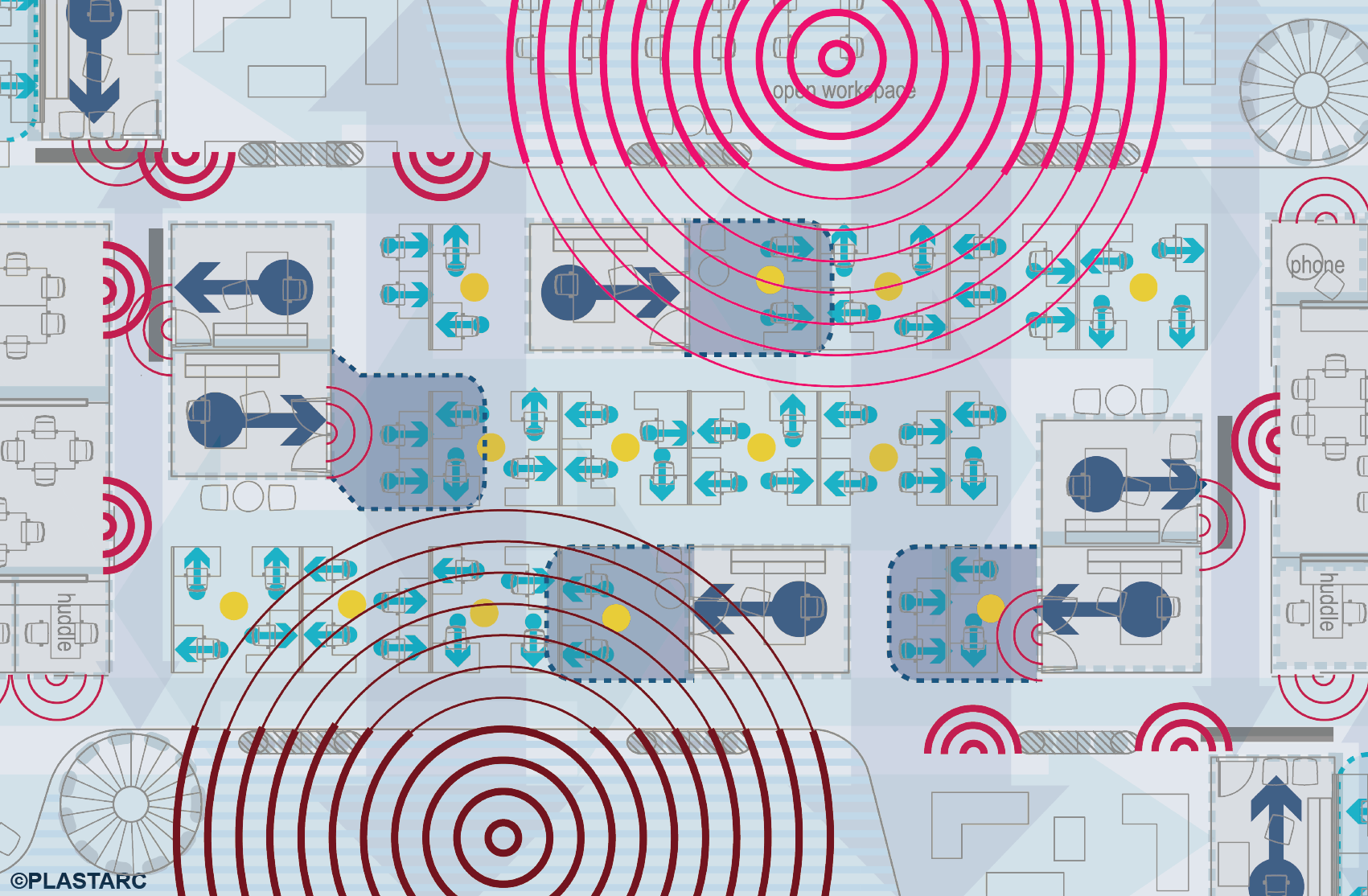

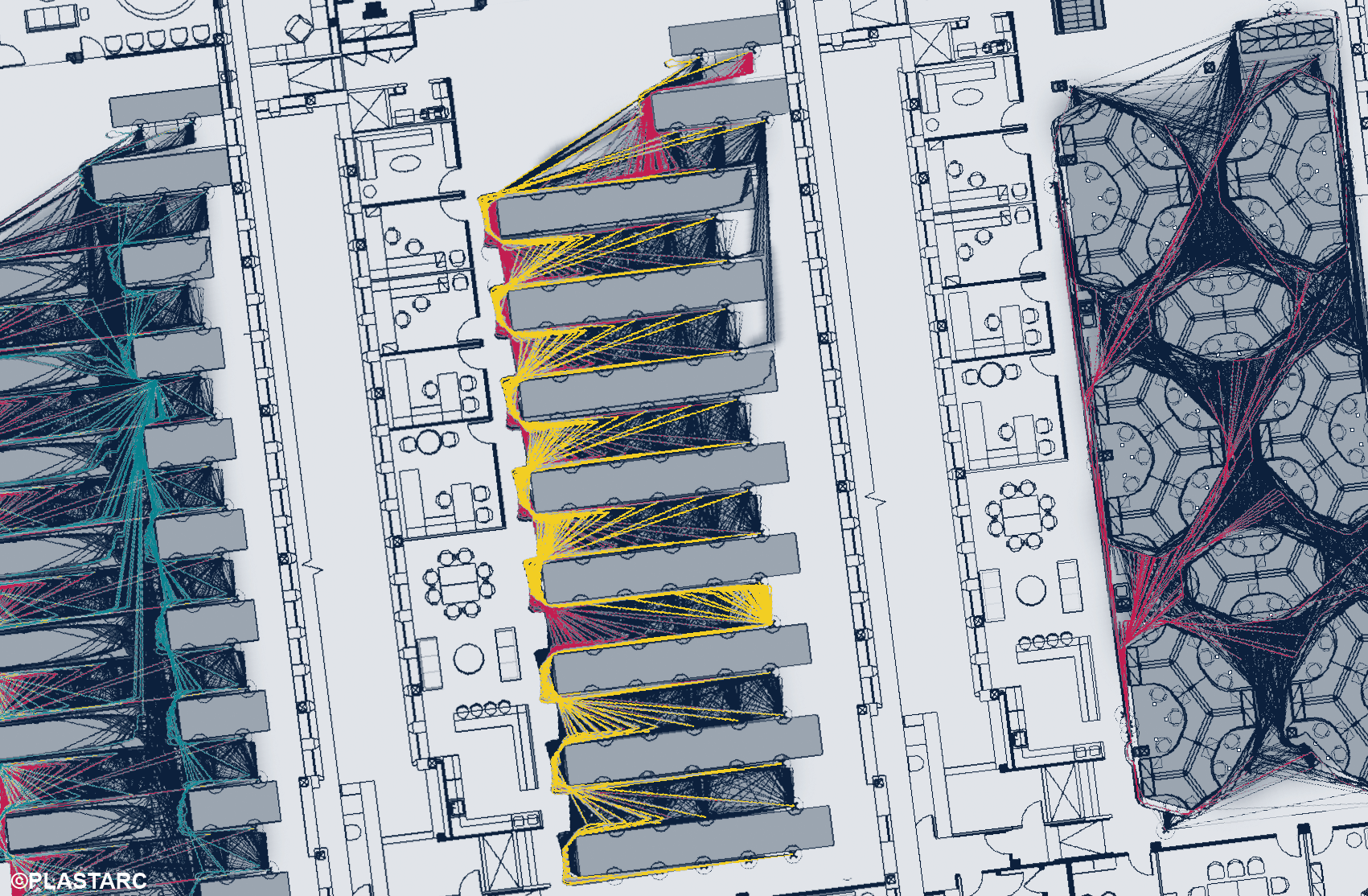

This is now changing. The effects of space on the behavior of occupants are now directly quantifiable, analyzable, and modelable. Crowd simulation software, which has historically been employed to evaluate emergency egress patterns in buildings, can simulate the behavior of crowds of dozens or even thousands of people in a given environment. Open source versions (for example, Vadere) allow anyone to experiment with and repurpose these tools.

We use some of these tools in our work with our clients. For example, when a financial services firm was contemplating changes to the layout of their trading floor, we simulated all possible routes that people could take through the space depending on its configuration. Broadening such approaches to incorporate richer sources of social and behavioral data will allow for more nuanced modeling and decision-making.
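To give a flavor of how a layout change alters the routes people take, here is a minimal sketch that compares the shortest walking route under two configurations. It is not the method used on the trading floor project, nor a crowd simulator like Vadere: it is a plain breadth-first search on a grid, and the two floor plans are invented for illustration.

```python
from collections import deque


def shortest_walk(layout, start, goal):
    """Breadth-first search on a grid; returns steps from start to goal, or None.

    layout is a list of equal-length strings: '.' is walkable, '#' is furniture.
    start and goal are (row, column) tuples.
    """
    rows, cols = len(layout), len(layout[0])
    queue = deque([(start, 0)])
    seen = {start}
    while queue:
        (r, c), steps = queue.popleft()
        if (r, c) == goal:
            return steps
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and layout[nr][nc] == '.' and (nr, nc) not in seen):
                seen.add((nr, nc))
                queue.append(((nr, nc), steps + 1))
    return None  # the goal cannot be reached in this configuration


# Two hypothetical configurations of the same 4x4 floor.
LAYOUT_A = [
    "....",
    ".##.",
    ".##.",
    "....",
]
LAYOUT_B = [
    "....",
    "###.",
    "###.",
    "....",
]

if __name__ == "__main__":
    for name, layout in (("A", LAYOUT_A), ("B", LAYOUT_B)):
        print(name, shortest_walk(layout, (0, 0), (3, 0)))
```

In layout A the direct aisle is open and the walk takes 3 steps; in layout B the desks block it and the same trip takes 9. A richer model would weight routes by who uses them and how often.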

Real-time data informs interactions

The next level of sophistication, which seems closer to what people mean when they say “AI”, is responsive spaces. Real-time data is already improving experience within curated hospitality environments like theme parks, shopping malls, and cruise ships.

It is entirely possible to extend similar approaches to any environment that can be connected to a single digital platform, even at urban scale. For example, imagine an integrated experience for the many restaurants in a busy destination like Times Square. Due to a variety of factors, one may be overflowing with customers while another is slow. Sensors could, without human intervention, detect or anticipate this overcrowding and make adjustments to encourage people to go to underused spaces. This might even include introducing a food or drink special to entice people and subtly rebalance the crowd.
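The rebalancing logic in this scenario can be reduced to a simple rule, sketched below. Everything here is an assumption made for illustration: the venue names, the headcounts, and the 90% and 50% thresholds are invented, and a real system would work from live sensor feeds and forecasts rather than a static dictionary.

```python
def rebalance(venues, high=0.9, low=0.5):
    """Return (crowded, promote) lists of venue names based on utilization.

    venues maps a name to (current_headcount, capacity).
    A venue is 'crowded' at or above the high threshold; venues at or below
    the low threshold are candidates for a promotion, but only when
    at least one venue is actually crowded.
    """
    utilization = {name: count / cap for name, (count, cap) in venues.items()}
    crowded = sorted(n for n, u in utilization.items() if u >= high)
    promote = sorted(n for n, u in utilization.items() if u <= low) if crowded else []
    return crowded, promote


if __name__ == "__main__":
    # Hypothetical sensor snapshot: name -> (people present, seats available).
    snapshot = {"Diner": (95, 100), "Bistro": (20, 80), "Cafe": (42, 60)}
    crowded, promote = rebalance(snapshot)
    print("Overflowing:", crowded)
    print("Offer a special at:", promote)
```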

Workplaces and buildings that use real-time data to adjust the experience for users already exist, and will become increasingly common and capable as the technologies become ubiquitous. Network effects also apply here. As more physical spaces are sensor-equipped, the value of each increases. When sensor data is combined with scheduling data, it can be used to optimize the use and comfort of spaces.

While AI for the workplace is not here yet, the investments that can enable it are already beginning to pay for themselves. Systems that collect the requisite data make their own business case: they make the experience of the environment better in the short term, all while collecting the data that will be useful down the road.

Synthesizing intelligence

Combining these different typologies results in an intelligent approach to design that optimizes from pre-design through occupancy.

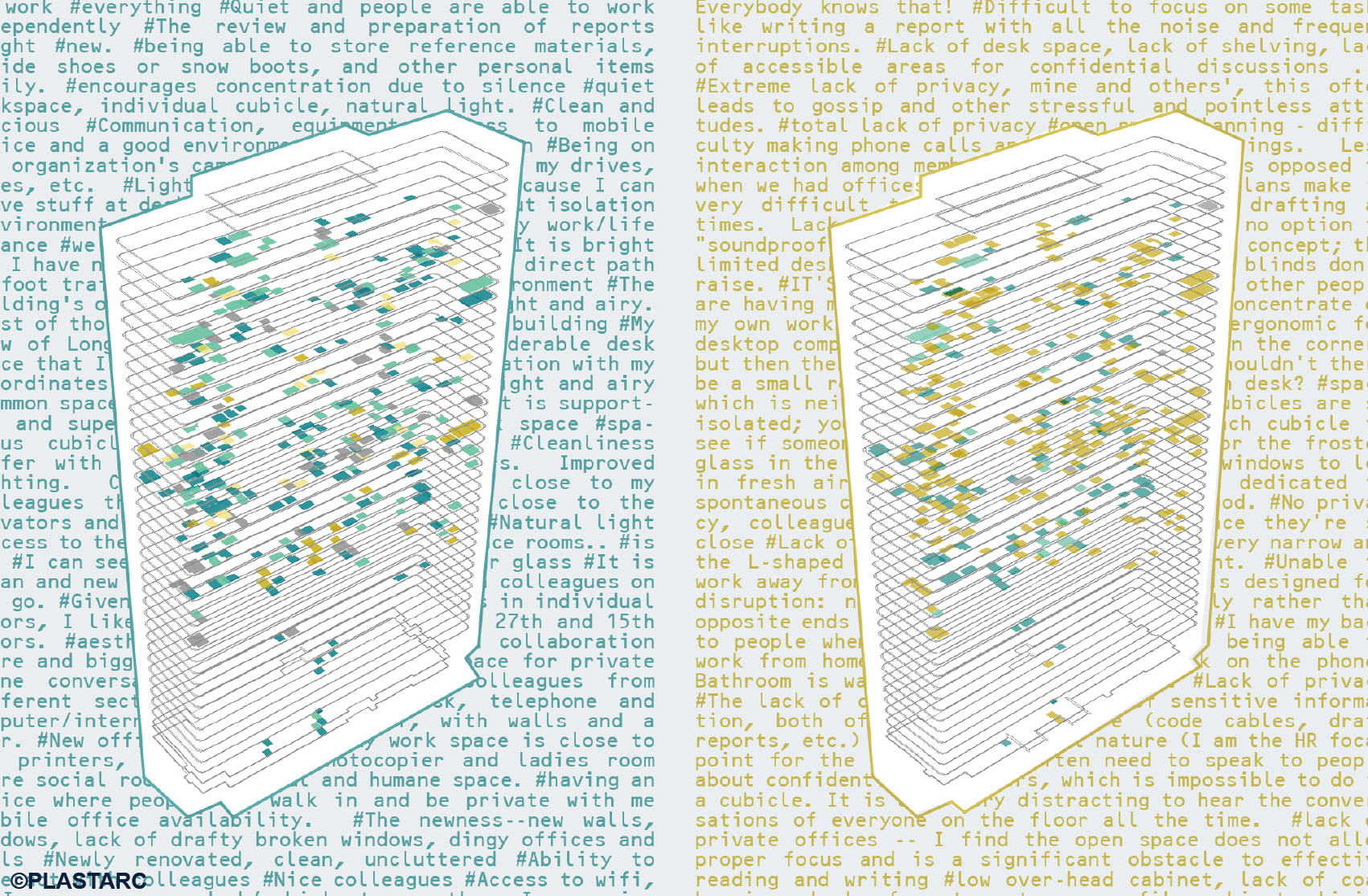

Imagine a building that is being constructed for an existing business. Before a single sketch has been drawn, the project team has already conducted detailed research of the existing building and its occupants. They have used what they learned to create a model of not just the building, but the individual users, including observations about their behavioral patterns and results of their engagement surveys. The design team can now simulate the social environment in the current or future space.

If, for example, the head of the IT department usually heads to the coffee bar after her first meeting of the day, that becomes an input into the model. Who might she bump into on the way? How does that change if the location of the coffee bar is changed? Which potential configurations deliver the most efficient use of her time and greatest boost to her job satisfaction? Such interaction modeling is not merely theoretical—it is a refinement of methods that already exist.

The value of such an approach is currently limited by the quality and quantity of input. The results of workplace research and people analytics projects can be used as inputs in these models. Environmental factors can also be incorporated. All of this data can be used to adjust the workplace experience in real time. For this to be possible, though, we need a more holistic view of what can be measured in the environment, with a commensurate shift in the approach to research and modeling.

For instance, if a simulation indicates that a number of people will tend to go to the cafe at a certain time, modeling can predict the change in noise levels both in the cafe (they go up) and in the work areas they are no longer occupying (they go down). How other people then respond to that change is another thing that can be simulated, accounting for their preferences. If the noisy cafe-goers are likely to distract people who are still working, white noise generators could be automatically activated. Or, if the goal is to get everyone to socialize more, environmental cues, mobile notifications to nearby users, or responsive signage can encourage everyone to join for lunch.
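The noise example can be made concrete with a small sketch. It uses the standard acoustics rule that n equal, incoherent sources raise the level by 10·log10(n) decibels over a single source; every constant (the level of one talker, the ambient floor, the attenuation between cafe and work area, the masking threshold) is an assumed value chosen for illustration, not a measurement.

```python
import math

SPEECH_DB = 60.0            # assumed level of one talker, in dB
AMBIENT_DB = 35.0           # assumed background level of an empty zone
WALL_ATTENUATION_DB = 15.0  # assumed loss between the cafe and the work area
DISTRACTION_DB = 50.0       # assumed level above which masking is switched on


def zone_level(talkers):
    """Estimated level of a zone with n talkers: L1 + 10*log10(n), floored at ambient."""
    if talkers <= 0:
        return AMBIENT_DB
    return max(AMBIENT_DB, SPEECH_DB + 10 * math.log10(talkers))


def should_mask(cafe_talkers):
    """True if cafe noise reaching the work area exceeds the distraction threshold."""
    spill = zone_level(cafe_talkers) - WALL_ATTENUATION_DB
    return spill > DISTRACTION_DB


if __name__ == "__main__":
    for n in (0, 1, 4, 10):
        print(n, round(zone_level(n), 1), should_mask(n))
```

Feeding the predicted cafe headcount from the behavioral simulation into `should_mask` is the link between the two typologies: the model anticipates the crowd, and the environment responds before the distraction occurs.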

Many of these developments are still years or even decades away. However, their seeds are being sown now. The examples offered here are not pie-in-the-sky. The fact that they can be envisioned as evolutions of current-day tools is evidence of the remarkable advances in measurement and analytical abilities over the last few years.

What we need now is more data—rigorous research on the full range of the human experience of the built environment. The data we collect and use today are the inputs that are needed to develop smarter algorithms tomorrow. As designers and real-estate professionals, our role is to discover what is of value to current and future occupants. The AI tools that are just around the corner will enable us to deliver spaces that perform better and are enjoyable to use.

This is the third installment in a multiyear conversation about the evolving role of data and technology in creating more people-centric design. In our first, we wrote about User Experience as a lens through which to revisit assumptions about design and create environments that enable performance and satisfaction. In our second, we discussed how Measuring Happiness, which is far more feasible than many people believe, offers new ways to explore the intersection of space and performance. Here, we discuss some near-future predictions based on our experience with clients.

Sourced through Scoop.it from: http://www.workdesign.com